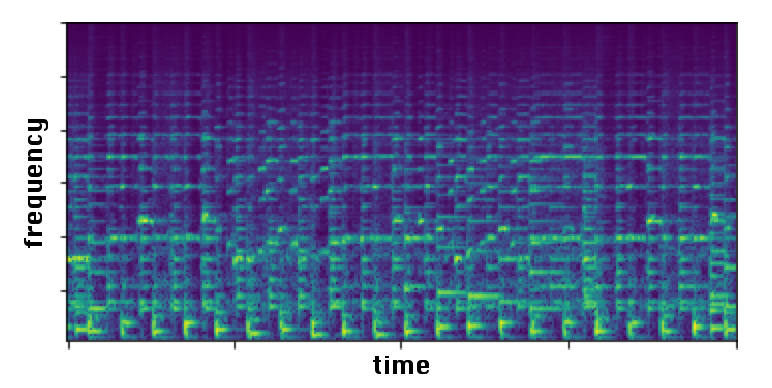

Music signals are difficult to interpret from their low-level features, perhaps even more so than images. For instance, highlighting part of a spectrogram or an image is often insufficient to convey the high-level ideas that are genuinely relevant to humans. In the following example of a spectrogram…

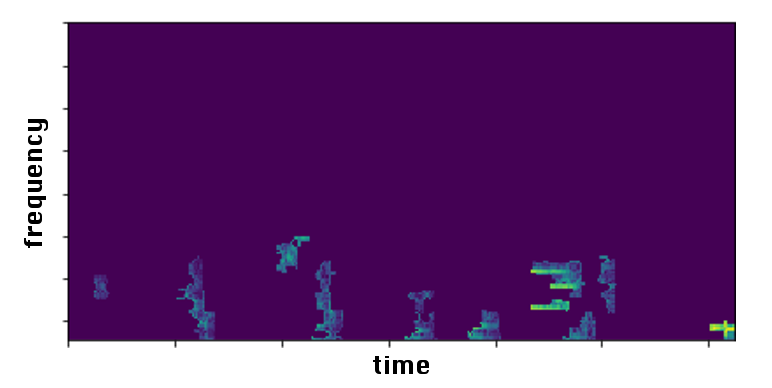

… let’s say that a given model estimated that the track exhibits a “happy” mood. Now, if we were to use a popular explanation technique such as LIME to understand what makes this track “happy”, we would obtain something similar to the following spectrogram, in which the dimensions with low attribution scores have been zeroed out:

These two images, a spectrogram and its generated explanation, were shamelessly stolen from "Two-level Explanations in Music Emotion Recognition" by V. Praher et al. (2019) for demonstration purposes.

… which probably made you shrug, since seeing bits of a spectrogram does not help you understand the decision mechanism involved in estimating that a song is indeed happy. In some cases, working at the spectrogram level is sufficient: when predicting the presence of an instrument, for example, we could expect the attribution map to highlight the target instrument's melody. The problem is that sometimes the space in which data is represented, so that it can be consumed by a model that makes predictions, does not align with the space in which humans would best receive an explanation about it.
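To make the masking step concrete, here is a minimal sketch of how an explanation image like the one discussed above could be produced once attribution scores are available. It assumes one attribution score per time-frequency bin is already given; it does not implement LIME itself, which scores interpretable segments rather than individual bins. The function name `mask_low_attribution` and the `keep_fraction` parameter are hypothetical, and the input arrays are random placeholders rather than real audio.

```python
import numpy as np


def mask_low_attribution(spectrogram, attribution, keep_fraction=0.2):
    """Zero out the spectrogram bins whose attribution falls below a quantile threshold.

    Hypothetical helper: `attribution` is assumed to hold one score per bin,
    with higher values meaning the bin mattered more for the prediction.
    """
    spectrogram = np.asarray(spectrogram, dtype=float)
    attribution = np.asarray(attribution, dtype=float)
    if spectrogram.shape != attribution.shape:
        raise ValueError("spectrogram and attribution must have the same shape")
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")

    # Bins at or above this quantile of the attribution scores are kept.
    threshold = np.quantile(attribution, 1.0 - keep_fraction)
    keep = attribution >= threshold

    # Everything else is set to zero, as in the explanation image.
    return np.where(keep, spectrogram, 0.0)


# Placeholder inputs: 128 frequency bins by 256 time frames of random values.
rng = np.random.default_rng(0)
spec = rng.random((128, 256))
attr = rng.random((128, 256))
explanation = mask_low_attribution(spec, attr, keep_fraction=0.2)
```

The result has the same shape as the input, so it can be plotted side by side with the original spectrogram. The output is still expressed in time-frequency bins, which is precisely the limitation described above.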

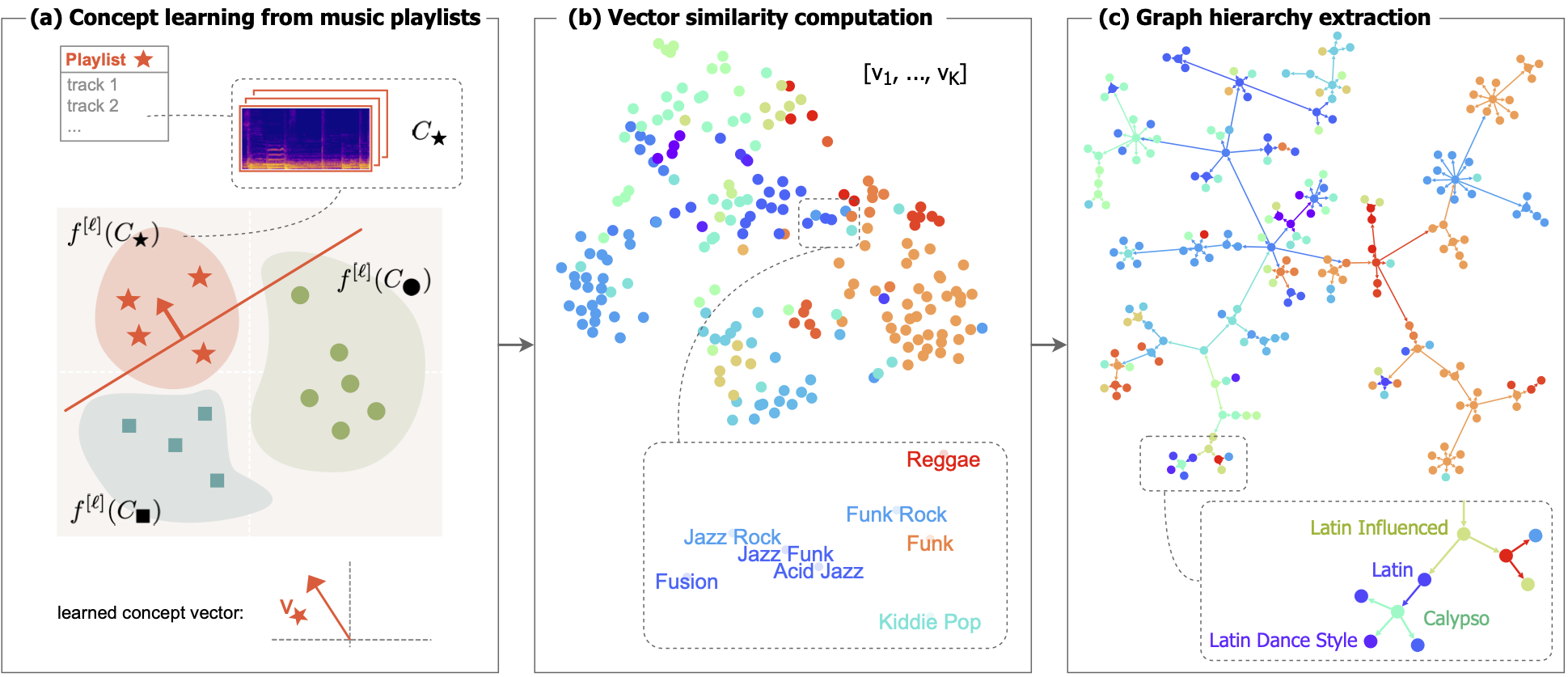

In the field of computer vision, concept learning was proposed to adjust explanations to the right abstraction level (e.g., detecting clinical concepts in radiographs). These methods have yet to be used for Music Information Retrieval. In this paper, we adapt concept learning to the realm of music, with its particularities. For instance, music concepts are typically non-independent and of mixed nature (e.g., genre, instruments, mood), whereas previous work assumed disentangled concepts. We propose a method to learn numerous music concepts from audio and then automatically hierarchise them to expose their mutual relationships. We conduct experiments on datasets of playlists from a music streaming service, which serve as a few annotated examples for diverse concepts. Evaluations show that the mined hierarchies are aligned with ground-truth hierarchies of concepts, when available, and with proxy sources of concept similarity in the general case.

Some demo results are available online to play with: research.deezer.com/concept_hierarchy.

This paper has been published in the proceedings of the 23rd International Society for Music Information Retrieval Conference (ISMIR 2022).